Agentic AI moves beyond static models that only process data, toward systems capable of independent action, planning, and self-correction. This shift positions AI not just as a tool for analysis but as an active participant that can pursue complex goals in dynamic environments. Understanding Agentic AI matters because it underpins the next generation of intelligent systems, from advanced robotics to automated workflow managers.

The term "agentic" refers to agency: the capacity of an entity to act independently and make its own choices. In the context of AI, this means building systems that can formulate high-level objectives, break them down into actionable steps, execute those steps, monitor the results, and adapt their strategy when failures occur, all without constant human intervention. This capability is what distinguishes an intelligent agent from a traditional, reactive algorithm.

This introduction establishes what Agentic AI is, contrasts it with earlier forms of AI, and sets the stage for an examination of the core components that allow these systems to operate effectively in the real world.

What Exactly is Agentic AI?

Agentic AI refers to artificial intelligence systems designed to operate autonomously toward a defined set of goals. Unlike a standalone large language model (LLM), which excels at generating content from prompts, an agentic system is built to act on what it generates. It has the scaffolding needed to interface with external tools, maintain a persistent memory, and work through multi-step decisions to solve a problem.

The key differentiator here is the concept of proactive execution. A standard AI might tell you the best route to a destination; an Agentic AI will book the necessary transport, monitor for delays, and automatically rebook if a flight is canceled, all based on its initial objective. This shift moves the burden of orchestration from the human user to the AI system itself, demanding higher reliability and a more robust internal reasoning loop.

This autonomy is usually structured around a continuous loop of perception, planning, action, and reflection. The system perceives its current state (via sensors or data feeds), plans the next best move, executes an action using its available tools (like APIs or software commands), and then reflects on the outcome to refine its subsequent plan. This iterative process is the engine driving genuine artificial agency.
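
The loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the `CounterEnv` environment, the `LoopAgent` class, and the rule-based `plan` method are all hypothetical stand-ins. A real agent would replace the hand-written rule with a reasoning engine and the counter with actual sensors, APIs, or commands.

```python
from dataclasses import dataclass, field


@dataclass
class CounterEnv:
    """Toy environment (hypothetical): the agent must move `value` to `target`."""
    value: int = 0
    target: int = 3


@dataclass
class LoopAgent:
    env: CounterEnv
    history: list = field(default_factory=list)

    def perceive(self) -> int:
        # Read the current state (stands in for sensors or data feeds).
        return self.env.value

    def plan(self, state: int) -> str:
        # Pick the next move; a real agent would consult a reasoning engine here.
        if state < self.env.target:
            return "increment"
        if state > self.env.target:
            return "decrement"
        return "stop"

    def act(self, action: str) -> None:
        # Execute the chosen action (stands in for an API call or command).
        if action == "increment":
            self.env.value += 1
        elif action == "decrement":
            self.env.value -= 1

    def reflect(self, before: int, action: str, after: int) -> None:
        # Record the outcome; this toy planner does not read it back,
        # but a fuller agent would use it to refine later plans.
        self.history.append((before, action, after))

    def run(self, max_steps: int = 10) -> int:
        for _ in range(max_steps):
            state = self.perceive()
            action = self.plan(state)
            if action == "stop":
                break
            self.act(action)
            self.reflect(state, action, self.perceive())
        return self.env.value


agent = LoopAgent(CounterEnv(value=0, target=3))
final_value = agent.run()
print(final_value, agent.history)
```

The `max_steps` cap is a deliberate choice: an autonomous loop with no step budget can run indefinitely if the plan never converges.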

Core Components of Autonomous Agents

The functionality of any robust Agentic AI hinges on several interconnected core components that work together to enable complex behavior. The first and arguably most crucial component is the Reasoning Engine, often powered by an LLM. This engine is responsible for high-level strategic thinking: interpreting the goal, deciding which sub-tasks are necessary, and formulating the logical sequence for execution. It acts as the system’s "brain."
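
To make the "decide sub-tasks, then sequence them" step concrete, here is a small sketch. It assumes the reasoning engine returns sub-tasks together with their dependencies; the `decompose` function is a hypothetical, hard-coded stand-in for that LLM call, and the task names are invented for illustration. The ordering itself uses `graphlib.TopologicalSorter` from the Python standard library (3.9+).

```python
from graphlib import TopologicalSorter


def decompose(goal: str) -> dict[str, set[str]]:
    """Hypothetical stand-in for an LLM call that breaks a goal into sub-tasks.

    Returns a mapping of sub-task -> set of sub-tasks it depends on.
    """
    # Hard-coded for illustration; a real reasoning engine would generate this.
    return {
        "search_flights": set(),
        "book_flight": {"search_flights"},
        "book_hotel": {"book_flight"},
        "send_itinerary": {"book_flight", "book_hotel"},
    }


def sequence(subtasks: dict[str, set[str]]) -> list[str]:
    # Order the sub-tasks so that every dependency runs before its dependents.
    return list(TopologicalSorter(subtasks).static_order())


plan = sequence(decompose("Plan a trip"))
print(plan)
```

Keeping decomposition and sequencing separate means the ordering can be checked deterministically even when the decomposition comes from a model.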

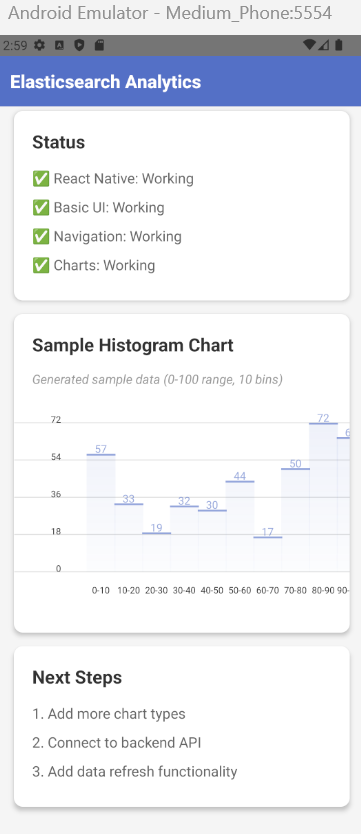

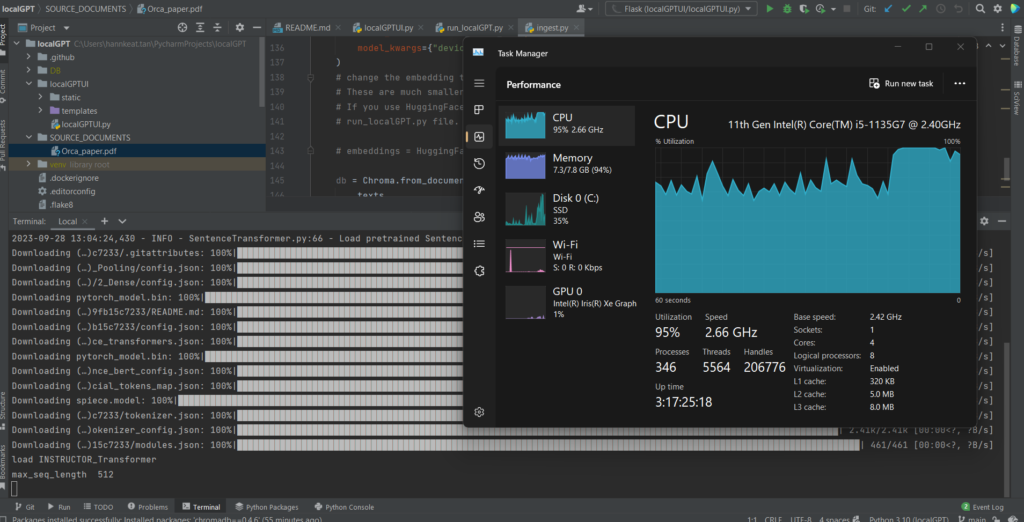

Second, an agent requires Memory and Context Management. This involves both short-term memory (the immediate context of the current task, often managed via the LLM’s context window) and long-term memory (a persistent knowledge base, frequently implemented using vector databases). Memory allows the agent to recall past actions and their successes or failures, and to maintain a consistent understanding of the overall mission over extended periods, which helps prevent repeated errors.
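
The two memory tiers can be illustrated with a toy example. This is a sketch under loud assumptions: the `AgentMemory` class is hypothetical, the bag-of-words `embed` function stands in for a learned embedding model, and a plain Python list stands in for a vector database. Only the overall shape (a bounded recent window plus similarity-based recall) reflects the idea in the text.

```python
import math
from collections import Counter, deque


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would use a learned model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class AgentMemory:
    def __init__(self, short_term_size: int = 3):
        # Short-term memory: only the most recent entries, like a bounded context window.
        self.short_term = deque(maxlen=short_term_size)
        # Long-term memory: every entry with its vector (stands in for a vector database).
        self.long_term = []

    def remember(self, text: str) -> None:
        self.short_term.append(text)
        self.long_term.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> list:
        # Return the k stored entries most similar to the query.
        q = embed(query)
        ranked = sorted(
            self.long_term, key=lambda item: cosine(q, item[1]), reverse=True
        )
        return [text for text, _ in ranked[:k]]


memory = AgentMemory(short_term_size=2)
memory.remember("payment API call failed with timeout")
memory.remember("user prefers morning flights")
memory.remember("hotel booking confirmed for Friday")

print(list(memory.short_term))
print(memory.recall("why did the payment fail"))
```

Note that the oldest entry has dropped out of the short-term window but is still retrievable from long-term memory by similarity, which is the behavior that lets an agent recall an earlier failure.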

Finally, the third essential pillar is Tool Use and Action Execution. An intelligent agent is only as capable as the tools it can wield. This component includes the interface mechanisms that allow the agent to interact with the external world, whether that means running code, querying databases, sending emails, or controlling physical hardware. The agent must possess an accurate catalog of its available tools and the ability to correctly format the necessary calls (tool-use prompting) to achieve the intended action, closing the feedback loop necessary for true agency.
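
A tool catalog and a dispatcher for formatted calls might look like the following. This is a hedged sketch rather than any particular framework's API: the `TOOLS` registry, the two example tools, the `execute_tool_call` function, and the JSON call shape (`name` plus `arguments`) are all assumptions made for illustration.

```python
import json


def add(a: float, b: float) -> float:
    return a + b


def word_count(text: str) -> int:
    return len(text.split())


# Catalog of available tools: name -> (callable, description shown to the model).
TOOLS = {
    "add": (add, "Add two numbers. Arguments: a, b."),
    "word_count": (word_count, "Count words in a string. Arguments: text."),
}


def execute_tool_call(raw_call: str) -> dict:
    """Parse a JSON tool call and run it, returning a result or an error."""
    try:
        call = json.loads(raw_call)
        name, args = call["name"], call["arguments"]
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return {"ok": False, "error": f"malformed call: {exc}"}
    if name not in TOOLS:
        return {"ok": False, "error": f"unknown tool: {name}"}
    func, _description = TOOLS[name]
    try:
        return {"ok": True, "result": func(**args)}
    except TypeError as exc:
        # Wrong or missing arguments; the error is returned so the agent can retry.
        return {"ok": False, "error": f"bad arguments: {exc}"}


good = execute_tool_call('{"name": "add", "arguments": {"a": 2, "b": 3}}')
bad = execute_tool_call('{"name": "send_email", "arguments": {}}')
print(good)
print(bad)
```

Returning errors as data instead of raising them is one way to close the feedback loop: the agent sees why a call failed and can correct its next attempt.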

Agentic AI is more than an incremental upgrade: it is a shift toward autonomous systems capable of sustained, goal-oriented behavior. By integrating reasoning engines with robust memory systems and reliable tool-use capabilities, we are building systems that move from being passive responders to active problem-solvers. Challenges remain around safety, alignment, and error handling, but the three core components discussed here (reasoning, memory, and action) provide the blueprint for the next wave of intelligent automation across industries.